My joint work, published online in Applied Mathematics and Optimization (doi:10.1007/s00245-020-09718-8), proves rigorously that Backtracking Gradient Descent (GD) is the best optimisation method with respect to convergence and avoidance of saddle points.

The implementation of Backtracking GD performs better than the methods mentioned in the previous paragraph on the CIFAR10 and CIFAR100 datasets (a result later replicated by a different group in arXiv:1905.09997). My joint work arXiv:2006.01512 proposes a new modification of Newton's method that can avoid saddle points while still being fast.
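For readers unfamiliar with the method, here is a minimal sketch of gradient descent with a backtracking (Armijo) line search, the rule that gives Backtracking GD its name. It is an illustration under stated assumptions, not the implementation used in the cited papers: the function name `backtracking_gd`, the parameter names `delta0`, `alpha`, `beta`, and their default values are all chosen here for the example.

```python
def backtracking_gd(f, grad, x0, delta0=1.0, alpha=0.5, beta=0.5,
                    tol=1e-8, max_iter=1000):
    """Gradient descent with Armijo backtracking (illustrative sketch).

    f      : objective, maps a list of floats to a float
    grad   : gradient of f, maps a list of floats to a list of floats
    delta0 : initial trial learning rate (assumed default)
    alpha  : Armijo sufficient-decrease constant, 0 < alpha < 1
    beta   : shrink factor for the learning rate, 0 < beta < 1
    """
    x = list(x0)
    for _ in range(max_iter):
        g = grad(x)
        g_norm_sq = sum(gi * gi for gi in g)
        if g_norm_sq ** 0.5 < tol:
            break  # gradient is (numerically) zero: stop
        fx = f(x)
        delta = delta0
        # Shrink delta until Armijo's condition holds:
        #   f(x - delta * g) <= f(x) - alpha * delta * ||g||^2
        # The floor on delta guards against an endless loop from rounding.
        while (f([xi - delta * gi for xi, gi in zip(x, g)])
               > fx - alpha * delta * g_norm_sq and delta > 1e-16):
            delta *= beta
        x = [xi - delta * gi for xi, gi in zip(x, g)]
    return x


# Usage on a simple quadratic f(x, y) = x^2 + 10 y^2 (hypothetical test case).
x_min = backtracking_gd(
    lambda p: p[0] ** 2 + 10 * p[1] ** 2,
    lambda p: [2 * p[0], 20 * p[1]],
    [3.0, -2.0],
)
```

The learning rate is chosen afresh at every step from the local behaviour of the objective, so no global Lipschitz constant for the gradient has to be known in advance.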

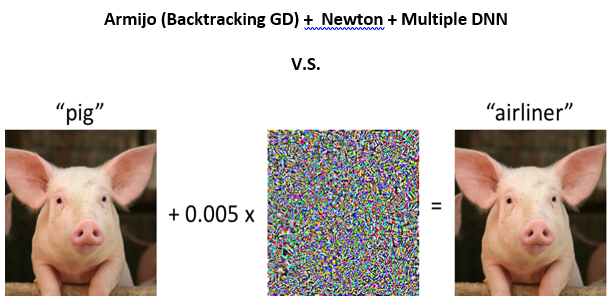

This research project explores whether Backtracking GD can help resolve one important issue: adversarial attacks. We will combine this with the use of many different deep neural networks (DNNs). Both experimental and theoretical research will be pursued. Since current adversarial attacks use gradient descent, the new modification of Newton's method mentioned above will also be explored for this purpose where possible.

Requirements

- A Master’s degree in Mathematics (preferably in Optimisation, Dynamical Systems or Random Processes) and/or in Deep Learning/Machine Learning is required.

- Documented skills in research or programming (such as online preprints/papers or code on GitHub) are a plus.

Supervisors

Associate Professor Tuyen Trung Truong

Adjunct Professor Anders Christian Hansen

Call 2: Project start autumn 2022

This project is in call 2, starting autumn 2022.