The problem: In an increasingly “technologified” world, we may worry whether heavier use of a home-office laptop during a lockdown has predictable, specific, negative (and potentially avoidable) outcomes such as back or neck pain. Working out at the gym, we may worry whether an exercise actually helps us get fitter or just makes us tighten up more. Body-worn sensors, and sensors embedded in furniture, clothes and floors, can help measure functioning and well-being, improve product design, and create better work and exercise routines. However, without a good model of us, the users, the value of those data is limited: it is then hard to say with any degree of certainty that sitting in a certain way is bad for your posture, or that a chair is poorly designed.

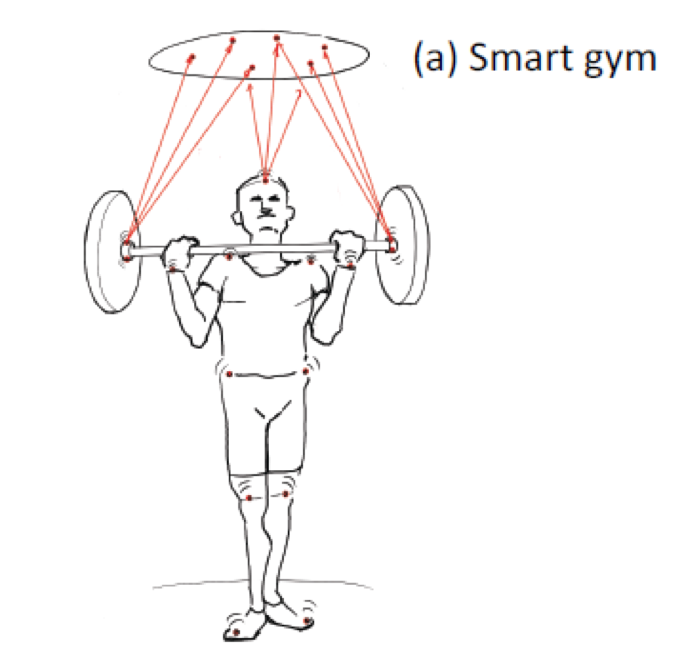

One of the use cases is to develop a Smart Gym solution with our project partner SATS. The idea is to obtain absolute positions of gear and body parts, as opposed to the relative motion provided by typical IMU-based solutions.
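Why relative motion is a weak basis for absolute positions can be illustrated with a simplified, assumed error model (not a description of any particular IMU product): if position is obtained by double-integrating accelerometer output that carries a small constant bias $b_a$, and the initial position $p(0)$ and velocity $v(0)$ are known exactly, the position error grows quadratically with time.

$$
\hat{p}(t) = p(0) + v(0)\,t + \int_0^t \!\! \int_0^s \big(a(u) + b_a\big)\,du\,ds,
\qquad
e(t) = \hat{p}(t) - p(t) = \int_0^t \!\! \int_0^s b_a\,du\,ds = \tfrac{1}{2}\, b_a\, t^2
$$

With an assumed bias of $b_a = 0.01\ \text{m/s}^2$, the error after 60 s is already $\tfrac{1}{2} \cdot 0.01 \cdot 60^2 = 18$ m, which is why an absolute position reference is attractive.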

To do this, we aim to leverage a new sensor technology, so-called PMUTs (piezoelectric micromachined ultrasonic transducers), which is currently being commercialized by a new SINTEF startup company, Sonair.

Specifically, each "beacon" consists of a PMUT, a driver circuit and a small battery, a solution that remains to be designed and assembled. The complete device would use some kind of adhesive to attach the beacon to a body part or a piece of gear. One could also consider a battery that is recharged by induction; for example, all the beacons could be placed in an inductive charging "cup" to be recharged.

The driver could be an MCU such as a small Arduino-compatible board (the smaller, the better); the Arduino Pico is one option (see picture). Previous work has shown that the output signal can be a chirp coded as a pulse train, and that no additional amplification of the output signal is needed.
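The chirp parameters and the exact pulse-train coding are not specified here. As a minimal sketch, assuming a linear chirp whose 1-bit (square-wave) version is produced by toggling an output pin each time the chirp phase crosses a multiple of π, the toggle times an MCU would need to schedule can be precomputed as below. The 40 to 60 kHz sweep over 1 ms, the 48 MHz timer clock in the usage example, and the function names are hypothetical, chosen only for illustration.

```python
import math


def chirp_toggle_times(f0, f1, duration):
    """Times (s) at which a 1-bit square-wave version of a linear chirp toggles.

    Assumed model: phase phi(t) = 2*pi*(f0*t + 0.5*k*t**2), k = (f1 - f0) / duration.
    sign(sin(phi)) flips whenever phi crosses a multiple of pi, i.e. when
    f0*t + 0.5*k*t**2 = n/2 for n = 1, 2, ...
    """
    if f0 <= 0 or f1 <= 0 or duration <= 0:
        raise ValueError("frequencies and duration must be positive")
    k = (f1 - f0) / duration  # sweep rate in Hz/s
    cycles = f0 * duration + 0.5 * k * duration ** 2  # total cycles in the sweep
    times = []
    for n in range(1, int(math.floor(2 * cycles)) + 1):
        if k == 0:
            t = n / (2 * f0)  # constant frequency: evenly spaced half-periods
        else:
            # positive root of 0.5*k*t^2 + f0*t - n/2 = 0
            t = (-f0 + math.sqrt(f0 ** 2 + k * n)) / k
        times.append(t)
    return times


def toggle_intervals_in_ticks(times, clock_hz):
    """Convert toggle times to integer timer-tick delays between successive edges."""
    ticks = [round(t * clock_hz) for t in times]
    return [b - a for a, b in zip([0] + ticks[:-1], ticks)]


# Hypothetical example: 40 -> 60 kHz up-chirp lasting 1 ms, 48 MHz timer clock.
edges = chirp_toggle_times(40e3, 60e3, 1e-3)
delays = toggle_intervals_in_ticks(edges, 48e6)
```

Rounding each absolute edge time (rather than each interval) keeps the accumulated timing error below one tick over the whole chirp.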

On the receiving side, we envision a disc-like, ceiling-mounted device with multiple MEMS microphones as receivers. The idea is that every beacon is observable (i.e. not occluded) from at least four MEMS microphones. Because there are four unknowns (the three position coordinates plus the unknown time lag between the clock of the MCU and the clock of the ceiling-mounted disc), at least four arrival-time measurements are needed to compute both the 3-dimensional position and that time lag.
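The position-plus-time-lag computation can be sketched as a small nonlinear least-squares problem. This is a minimal sketch, not the project's method: it assumes a speed of sound of 343 m/s, microphone coordinates known in metres with z pointing up, and arrival times measured on the disc's clock, and it uses plain Gauss-Newton iterations without convergence safeguards. The names `locate_beacon` and `_solve` are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed (dry air at roughly 20 °C)


def _solve(a, y):
    """Solve the square system a @ x = y by Gaussian elimination with partial pivoting."""
    n = len(y)
    m = [list(row) + [y[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))
        x[r] = s / m[r][r]
    return x


def locate_beacon(mics, arrival_times, c=SPEED_OF_SOUND, iterations=50, tol=1e-12):
    """Estimate beacon position (x, y, z) and emission time tau on the receiver clock.

    Model: arrival_times[i] = tau + |p - mics[i]| / c.
    Unknowns: x, y, z, tau (four), so at least four microphones are required.
    """
    if len(mics) < 4 or len(mics) != len(arrival_times):
        raise ValueError("need one arrival time per microphone and at least 4 microphones")
    ranges = [c * t for t in arrival_times]  # work in metres; bias b = c * tau
    n = len(mics)
    centre = [sum(m[k] for m in mics) / n for k in range(3)]
    # Initial guess 1 m below the array centre (ceiling-mounted array, z up).
    est = [centre[0], centre[1], centre[2] - 1.0, 0.0]
    for _ in range(iterations):
        jtj = [[0.0] * 4 for _ in range(4)]  # normal matrix J^T J
        jtf = [0.0] * 4                      # gradient term J^T f
        for m, r in zip(mics, ranges):
            d = math.dist(est[:3], m)
            # partial derivatives of the residual w.r.t. (x, y, z, b)
            row = [(est[k] - m[k]) / d for k in range(3)] + [1.0]
            f = d + est[3] - r  # predicted minus measured range
            for i in range(4):
                jtf[i] += row[i] * f
                for j in range(4):
                    jtj[i][j] += row[i] * row[j]
        step = _solve(jtj, [-g for g in jtf])  # Gauss-Newton step
        est = [e + s for e, s in zip(est, step)]
        if max(abs(s) for s in step) < tol:
            break
    return tuple(est[:3]), est[3] / c
```

Because microphones on a flat disc are coplanar, the arrival times cannot distinguish a beacon below the disc from its mirror image above it; starting the iteration below the ceiling selects the physically meaningful solution. A microphone at the centre of the disc also helps: with microphones only on a ring, a beacon directly below the centre is equidistant from all of them, and its height can then not be separated from the time lag.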

In the final stage, the positions of the beacons are used as input to the AI model, which is the main aim of the MAXSENSE project.

MAXSENSE will use hybrid physics-AI techniques to develop human anatomical avatars from 3D multi-camera video streams. The avatars will subsequently be combined with wireless sensors to model and predict parameters such as muscular tone and tension distribution. We apply the avatars to real-life cases such as personalized training, workspace design and human-computer interaction, with guidance from our industry partners.