InteractionFIRST!

Hi, we are Svein (PhD candidate at IFI) and Jo Herstad (IFI), playing this path from July 2021.

This yellow design brief is called InteractionFIRST! and refers to an approach that first and foremost regards the interaction(s) as the desired output of technology (products, processes, systems).

Applied to developing technology, such as a sustainable mobile phone here, this approach calls for employing an “output pull” rather than an “input push”. That means interactions with, on, and through the mobile phone are put first and then determine what input (of energy, materials, and technical solutions) is necessary. Here the approach is applied to discover sufficient and sustainable design solutions, using the interactions as output pulls.

Step I

INSPIRE

Step PR

PROVOKE

Let’s begin with the “provocation” that this InteractionFIRST! design brief poses: regard the interaction first, i.e. not the technology, hardware etc., but the soft matter, i.e. the use(r), the interface, information & communication, etc.

“The digital interface […] stands for a gateway to the underlying “elements of the immaterial, the invisible and the non-sensual”, which are “gain[ing] importance [in design and even] create a new culture of design” [Folkmann, 2012]. Since such invisible soft matter […] “fundamentally structures the functions, usability and character of the object” [Folkmann, 2012], it plays a vital role in the use phase […].” (Folkmann, 2012; Junge, 2021)

The output-pull approach is derived from, and known as, “Principle 5: Products, processes, and systems should be “output pulled” rather than “input pushed” through the use of energy and materials.” (‘Peer Reviewed’, 2003). The intent of using it in this brainstorming and workshop process is, among other things, to foster transdisciplinarity in the field of sustainable design, which in the case of the mobile phone unites product design, interaction design, computer science, behavioural science, material science, and more. The following citation is taken from a context where product design meets material science and has been adapted to fit interaction design and the soft matter mentioned above. It conveys a very important and generally valid message:

“Given the growing interest in “upstream” collaborative projects between designers and [materials scientists, here: engineers/programmers], it is crucial to scrutinize designers’ creative contribution to [materials, here: intangible materials (interactions, code, etc.)] development beyond “coming up with” application ideas. […] Creativity [understood as being distributed between the designer and the material world] requires designers’ active participation in “discovering” the novel potentials of [materials, here: intangible materials] rather than merely translating the “given” materials information to [product] applications.” (Barati & Karana, 2019)

With this brief we want to foreground interactions in an effort to re-design the mobile phone. Along the way you might even practise a bit of undesigning (Coombs et al., 2018; Pierce, 2012; van der Velden, 2010), asking either which interfaces/interactions would still be possible or remain, or how the interaction would (have to) change due to an introduced “dysfunctionality”.

Feel free to use any of the note-taking techniques offered through the brainstorm journal templates (docx, doc, pdf) for keeping a diary/journal about your thoughts and ideas from here on!

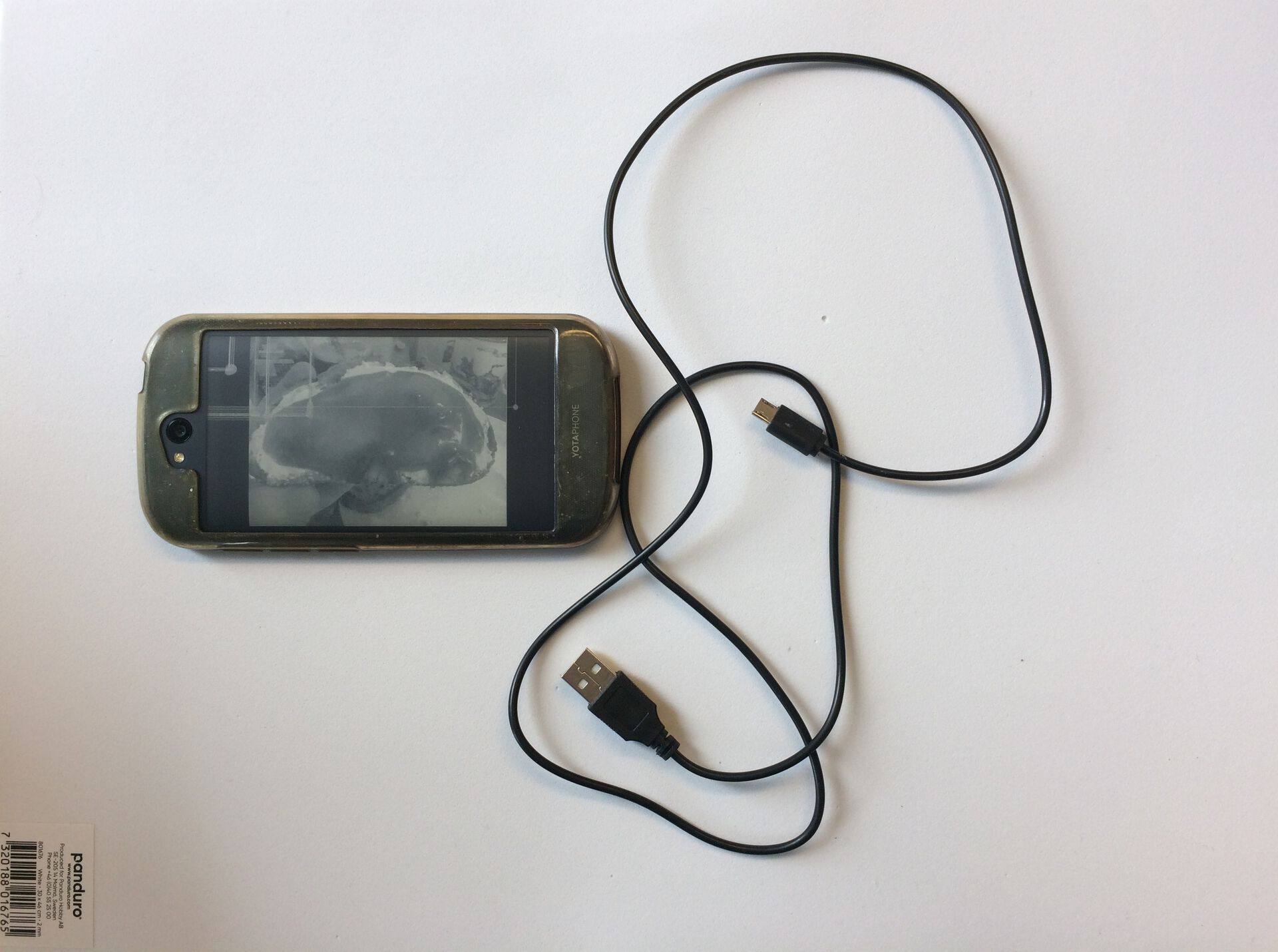

E-ink display technology was mentioned early on in the sustainable technology design literature (Tischner & Hora, 2012) and found its way into phone models, such as the Russian-made YotaPhone (ca. 2013-2019; see PhoneDB - The Largest Phone Specs Database, 2020, https://phonedb.net/index.php?m=device&id=8249&c=yota_phone_2_yd205_lte&d=detailed_specs for reference), as well as into e-readers and tablets like the Norwegian-made reMarkable. With passive e-ink display technology, energy consumption is significantly lower than with the usual LCD and (O)LED technology, because power is only needed for the switching operations when the displayed content changes. The state of the art is a greyscale rendering of visual content, i.e. the passive displays are still monochromatic: e-ink with RGB pixels that would provide the usual colour spectrum has been the subject of much imagination and investigation, but so far to no avail.

Does that in a way mean there should be greyscale versions of all media content, just as there are mobile/tablet/PC versions of websites, for instance? Can or should such a greyscale version in turn encourage the user to fall back on it whenever appropriate, e.g. when mostly reading text, such as prose/literature, comments on social media, news on websites, instructions or other static content?

On the other hand, human colour perception might be a strong pull for research on RGB e-ink. Should we strive to make interactions as similar as possible to what we are used to, while only “going green” through the technology’s energy efficiency gain? And what does the passive-ink imperative - “Consume no energy as long as the displayed content does not change!” - then mean for interactions like scrolling?
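To make the scrolling question a bit more tangible, here is a minimal sketch of a toy energy model. It is not taken from any datasheet: the class `DisplayModel`, the power and energy figures, and the number of redraws per scrolled page are all hypothetical placeholders chosen only to illustrate the reasoning that a passive display pays per content change, while an emissive display pays per second.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DisplayModel:
    """Toy energy model of a display (all numbers are illustrative assumptions)."""
    name: str
    hold_power_mw: float      # power needed just to keep an image visible
    refresh_energy_mj: float  # energy spent each time the content changes

    def session_energy_mj(self, seconds: float, content_changes: int) -> float:
        # milliwatts * seconds = millijoules
        return self.hold_power_mw * seconds + self.refresh_energy_mj * content_changes


# Hypothetical placeholder values, NOT measurements of any real device.
EMISSIVE = DisplayModel("emissive (LCD/OLED-like)", hold_power_mw=400.0, refresh_energy_mj=0.0)
PASSIVE = DisplayModel("passive (e-ink-like)", hold_power_mw=0.0, refresh_energy_mj=500.0)

READING_SECONDS = 600  # ten minutes of reading
PAGES = 20             # page flips in that time
STEPS_PER_PAGE = 30    # assumed redraws per page if the text is scrolled instead

paged = PASSIVE.session_energy_mj(READING_SECONDS, PAGES)
scrolled = PASSIVE.session_energy_mj(READING_SECONDS, PAGES * STEPS_PER_PAGE)
emissive = EMISSIVE.session_energy_mj(READING_SECONDS, PAGES)

for label, value in [("e-ink, paging", paged),
                     ("e-ink, scrolling", scrolled),
                     ("emissive", emissive)]:
    print(f"{label}: {value:.0f} mJ")
```

With these made-up numbers, paging through the text is far cheaper on the passive display, whereas smooth scrolling erodes the advantage. Real devices may behave quite differently, so treat the sketch as a prompt for discussing interaction patterns rather than as evidence.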

In general, consider the raised interaction issues and thought provocations under the question: How do they resonate with the Performance vs. Preference design principle (see picture)?

Step AA

ANSWER&ASK

The greyscale e-ink example from before is one of hardware limitations determining usability. In other cases, too, hardware and software are closely intertwined in providing (tangible) interactions. Another example, in which a software solution can at least work around a hardware bug, is assistive touch, which can be used when the home or lock-screen button cannot be used (either because of the user’s abilities, because there is no such button any more, as with the newest generation of smartphones, or because the button is broken).

“Step 6: Broken Home Button or Lock Button?

If your home button or lock screen button stopped working, this will really help you! Go to settings and press on “general” after that scroll down to the bottom and press “Accessibility.” Scroll to the bottom in the Physical & Motor and press “Assistivetouch” then turn that on. Then there will be a little button that works as a lock screen/home button.” (https://www.instructables.com/iPhone-iPod-Secrets/)

From the same instructable one might learn that there is even a gesture for zooming with one finger only (in case one has only one finger available or wants to use another body part, such as the nose). In general, we might understand people as being situationally (dis)abled to interact with technology, such as a mobile phone. And indeed, the user her/himself can be the bug, e.g. when dropping the phone and causing minor or major hardware damage that disrupts the usual use. As a technology fix, extra hardware has been developed as a ‘phone drop saviour’, e.g. spider legs that unfold in case of a fall, as pictured.

https://www.drcommodore.it/2018/06/30/ecco-lairbag-per-iphone/

Furthermore, the user can determine whether the phone and its interactions work at all, for example when (s)he forgets to charge it or uses it in places with few or no charging opportunities. The easiest hardware fix here seems to be carrying either a power bank or a large-battery phone model. But what is the software fix? Given that the availability of recharge is low, a low-power mode that keeps up very basic phone functions (clock, position tracking/compass, emergency calls, etc.) seems plausible, as with the battery-saving mode that phones offer below 20% charge. Being able to stretch the battery charge significantly in a situation of need might be an incentive to redesign the power modes a phone could offer. Think of somewhat broader applications such as a vacation/do-not-disturb mode (while staying reachable in case of an emergency), a hiking/navigation mode, a sleep mode, … you name it.
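The idea of situational power modes can be sketched in a few lines of code. The following is only a thought aid under stated assumptions: the feature names, the contents of each mode, and the function `choose_mode` are hypothetical and do not describe any existing phone operating system. The one rule the sketch enforces is that every mode keeps the very basic functions alive, and that the phone falls back to them when charge is low and no recharge is in sight.

```python
from enum import Enum, auto


class Feature(Enum):
    CLOCK = auto()
    POSITION = auto()
    EMERGENCY_CALLS = auto()
    MESSAGING = auto()
    NAVIGATION = auto()
    ALARM = auto()


# Functions every mode keeps alive (the "very basic" functions named in the text).
BASIC = frozenset({Feature.CLOCK, Feature.POSITION, Feature.EMERGENCY_CALLS})

# Hypothetical mode definitions: what each mode adds on top of BASIC.
MODES = {
    "basic": BASIC,
    "vacation": BASIC | {Feature.MESSAGING},
    "hiking": BASIC | {Feature.NAVIGATION},
    "sleep": BASIC | {Feature.ALARM},
}

LOW_CHARGE_PERCENT = 20  # battery-saving threshold mentioned in the text


def choose_mode(charge_percent: float, can_recharge_soon: bool, wanted: str) -> str:
    """Fall back to 'basic' when charge is low and no recharge is in sight."""
    if wanted not in MODES:
        raise ValueError(f"unknown mode: {wanted}")
    if charge_percent < LOW_CHARGE_PERCENT and not can_recharge_soon:
        return "basic"
    return wanted


def is_allowed(mode: str, feature: Feature) -> bool:
    """Check whether a feature stays available in the given mode."""
    return feature in MODES[mode]
```

For example, `choose_mode(15, False, "hiking")` falls back to `"basic"`, while the same call with a recharge in sight keeps `"hiking"`. Which functions belong in which mode is exactly the design question the brief raises, so the sets above are meant to be argued with.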

All of the above exemplifies different use patterns that the phone-user interaction could reveal. Use patterns (habits) could in turn offer one or several output pull(s) to put first and begin the (re)design with.

On another occasion you might further think about how these examples and reflections on output pull(s) based on user preferences resonate with the Progressive Disclosure design principle (see picture).

Let’s take two concrete examples of possible output pulls here. You can later reflect on whether they might be input pushes in disguise, but first assume that these alternative interactions are somehow desired (which will be more or less argued for below):

Number One suggestion stems from a phone design concept called the Cicret Bracelet: a smartphone without a display but with a skin-projection screen that supposedly also supports touch input (on the skin), mediated through the projection technology. As with keyboard projection (see https://en.wikipedia.org/wiki/Projection_keyboard, or a more recent application https://www.kickstarter.com/projects/805472788/masterkey-40), you can also think of projection onto other surfaces, e.g. as done for Google Glass onto glasses in front of your eye(s) (see https://www.google.com/glass/start/).

Does such an interaction-side pull, that we want something displayed for our eyes to perceive, justify the means, i.e. the materials and energy we have to put in? Is the input side - so projection and projective touch technology as well as the light (energy) in use - worthy of being preferred over former display technology, e.g. LCD and/or (O)LED? In other words, may the projection be significantly more effective, i.e. sufficient and thus sustainable?

From reading about minimalist phones like Light or Punkt (see http://www.bbc.com/future/story/20180814-the-new-phones-that-are-stuck-in-the-past), designers and developers also receive a call: “do not make smartphones into wearables (i.e. smartwatches) in a ‘chronos’ manner but in a ‘kairos’ manner”. How does that resonate with this first suggestion to consider such “miniaturized” technology (that fits in a bracelet or glasses frame) with only “projected” screens?

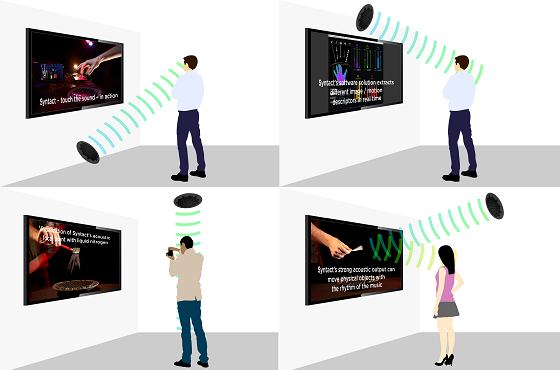

Number Two suggestion, which could be an output pull, concerns the audible aspects of using a mobile phone (or, in a wider sense, audio electronics). The interaction-side pull here is that whatever structure/technology is used, it shall provide audible content to the human ear. In many phone calls (or even meetings on digital conferencing platforms), the user wants some privacy: others in the surroundings should not, or would not want to, listen. With headphones or headsets this is achievable for the incoming talk/sound, but not quite for the outgoing talk/sound. A futuristic technology makes it possible to create so-called audio spotlights by beaming sound to certain places in a room. The technology is called AudioBeam and was developed by Sennheiser (see https://av.loyola.com/products/audio/pdf/audiobeam.pdf).

“AudioBeam is a new type of directional loudspeaker that is able to focus sound like the light beam of a torch, creating an audio spotlight for museums, exhibitions, theme parks and many other applications. Unlike conventional loudspeakers, which are only able to focus sound at high frequencies, AudioBeam emits sound as a lobe that can be precisely directed and is reflected by objects.

AudioBeam works with ultra-sound, modulating the audible sound onto an ultrasonic carrier frequency, much like a radio station does, and then emitting this signal via 150 special piezoelectric pressure transducers. Audible sound is only generated at a distance from the AudioBeam, when the signal is demodulated because of the non-linearity of air. This can be imagined like the creation of many small, virtual loudspeakers in the ultrasonic zone.

No sound is audible beside or behind an AudioBeam—you will only hear the audio information if you are directly within the sound beam, or if the sound is reflected by a smooth surface. This makes AudioBeam an ideal tool for information terminals, exhibitions, or even conferences where various AudioBeams emit several languages to a defined part of the auditorium.

AudioBeam is fitted with a sensor that switches the loudspeaker off when it registers movement within four yards. AudioBeam is an ideal tool for theme parks where it creates amazing effects with moving sound when combined with a small stepper motor. In an exhibition, AudioBeam will give explanations on an exhibit, which are only audible within a defined area. When mounted opposite an exhibit, the work of art will seem to “talk” to the visitors, as the ultrasonic beam is reflected by smooth surfaces. PC users can forget all about headphones. AudioBeam is their personal loudspeaker—without disturbing others in the office. A very discreet way of addressing customers—the sound will only reach the person directly in front of the machine. AudioBeam can be used to directly address an individual person within a safety area—putting them in an audio spotlight.”

Furthermore, one can imagine this audio spotlight in reverse, for instance when human speech is meant to operate a mobile phone or be transmitted through it, yet nobody in the immediate surroundings is interested in perceiving such “noise”. With the human voice on this input side, it definitely remains a task for R&D to somehow focus the human sound beam and cancel it out where it does not belong (e.g. with noise-cancelling technology?). Could it be that this noise/disturbance for others is holding us back from further developing speech-based interaction with the mobile phone or electronic devices in general?

On a last note, how would we designers, engineers and developers make use of biomimicry in the output-pull-devoted interaction design endeavours introduced here? Biomimicry, or bionics, is interestingly used in hardware, structural and motion-related terms to “copy” and apply principles from nature (e.g. Faludi et al. (2020) study the application of such methods). Could biomimicry also “go digital”, and if so, how?

References

Barati, B., & Karana, E. (2019). Affordances as Materials Potential: What Design Can Do for Materials Development. International Journal of Design, 13(3), 19.

Coombs, G., McNamara, A., & Sade, G. (2018). Undesign: Critical Practices at the Intersection of Art and Design. Routledge. https://doi.org/10.4324/9781315526379

Faludi, J., Yiu, F., & Agogino, A. (2020). Where do professionals find sustainability and innovation value? Empirical tests of three sustainable design methods. Design Science, 6. https://doi.org/10.1017/dsj.2020.17

Folkmann, M. (2012). The aesthetics of immateriality in design: Smartphones as digital design artifacts. Design and Semantics of Form and Movement. DeSForM 2012: MEANING.MATTER.MAKING, 137–147.

Junge, I. P. (2021). Single Use Goes Circular–An ICT Proto-Practice for a Sustainable Circular Economy Future. Journal of Sustainability Research, 3(1). https://doi.org/10.20900/jsr20210009

Peer Reviewed: Design Through the 12 Principles of Green Engineering. (2003). Environmental Science & Technology, 37(5), 94A-101A. https://doi.org/10.1021/es032373g

PhoneDB - The Largest Phone Specs Database. (2020). http://phonedb.net/

Pierce, J. (2012). Undesigning technology: Considering the negation of design by design. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 957–966. https://doi.org/10.1145/2207676.2208540

Tischner, U., & Hora, M. (2012). Sustainable electronic product design. In Waste Electrical and Electronic Equipment (WEEE) Handbook (pp. 405–441). Woodhead Publishing. https://doi.org/10.1533/9780857096333.4.405

van der Velden, M. (2010). Undesigning Culture: A brief reflection on design as ethical practice. Cultural Attitudes towards Technology and Communication 2010.

Step P

PROTOTYPE

Create a provocative prototype or mock-up that tells:

- how mobile devices might be designed from an interaction angle in the future, utilizing an output-pull approach,

- how the interaction with a so far only greyscale passive e-ink display (or similarly “situationally disabling” technology) may serve new understandings of sustainable/energy-efficient/sufficient technology versus the use(r), usability and the human desire to consume,

- whether and how interaction-pulled (or research- or market-pushed?) technology might influence or intervene in the transition to a future of sustainable societies.

The supplies you might want to use are shown to the left and will be handed to you. Feel free to prototype or test with whatever you consider suitable; it does not have to be "rocket science", just speculative/critical design.

FINALE: INTEGRATE

On the game board, all participant paths meet in the middle (the game board’s centre, which says ‘integrate’). After the individual participation phase, we get to hear what the other participants have come up with and how all participants could collaborate on the desirable futures we want to create.

Congratulations! With your participation we support digital materials development beyond “coming up with” application ideas.