Contents:

- The Concept

- System Operation

- Technical Information

- Interactive Installations

- Related Publications

- Contact

The Concept

A multi-track looping session is a musical performance, usually improvised, in which several audio clips, or “tracks”, are recorded live and then played back repeatedly in a loop. Every track can be seen as a separate musician: for example, one track can be dedicated to drums, another to guitar, another to vocals, and so on. In such a session, the “performer” could record a short drum loop and play it back, then add a guitar loop, then a vocal loop, until all of them are playing together to form a complete piece. The performer can work on each track individually and return to a previous one to modify it if needed. This process is typically done in music software such as Ableton Live with conventional music controllers (keyboards, knobs, buttons, etc.). This video shows an example of this way of creating music: TecBeatz Push 2 Performance.

To extend this experience, we designed and implemented a novel system for multi-track looping sessions in which every track is embodied as an interactive sound source that can be seen and heard in the physical space where the performance takes place. In addition, every sound source can act autonomously in terms of motion and music composition: a sound source is an “agent” that can move by itself in space and change the musical material of the track it represents. Because each agent is spatially “aware” of the performer and of the other agents, the agents can move as an artificial swarm that uses this information to navigate the area. This is why the system is referred to as a “human-swarm” interactive tool.
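The swarm behaviour described above can be illustrated with a minimal sketch. It assumes a boids-style rule set (cohesion toward the other agents, separation from anything that is too close, including the performer); the source does not state which swarm algorithm the system uses, and the names `swarm_step`, `personal_space`, and `max_step` are hypothetical.

```python
import math

Vec = tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def _norm(a: Vec) -> float:
    return math.sqrt(sum(c * c for c in a))


def swarm_step(agent: Vec, performer: Vec, others: list[Vec],
               personal_space: float = 0.5, max_step: float = 0.1) -> Vec:
    """Return the agent's next position (assumed rules, not the authors')."""
    move: Vec = (0.0, 0.0, 0.0)

    # Cohesion: drift toward the centroid of the other agents.
    if others:
        centroid = tuple(sum(c) / len(others) for c in zip(*others))
        move = _add(move, _sub(centroid, agent))

    # Separation: push away from the performer and from any agent
    # that is closer than personal_space.
    for point in [performer, *others]:
        away = _sub(agent, point)
        distance = _norm(away)
        if 0.0 < distance < personal_space:
            move = _add(move, _scale(away, (personal_space - distance) / distance))

    # Limit how far the agent travels in one update.
    length = _norm(move)
    if length > max_step:
        move = _scale(move, max_step / length)
    return _add(agent, move)
```

Calling `swarm_step` once per frame for every released agent, with the headset position passed as `performer`, would make the spheres gather loosely while keeping clear of the user. The distances are in metres only by assumption.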

To make this experience possible, the system is built as a mixed physical-virtual environment that uses “Extended Reality (XR)” and “Spatial Audio” technologies to show interactive virtual objects in the physical room. Specifically, we use “XR headsets” for visualization (e.g. the Microsoft HoloLens and the Meta Quest 3), a “multi-speaker spatial audio setup” for 3D sound, and, in an early version, an “optical motion capture system” to track a physical object that is used to move a virtual one. The user plays the loops on a traditional MIDI keyboard controller connected to the system and uses gestures to interact with the agents.

System Operation

The performer creates a “track” or “sound source” by playing and recording a musical line on the MIDI keyboard controller and shaping the sound's properties with filters and effects through physical knobs. The track is recorded as a loop, meaning that the musical material repeats continuously. This track is known as a “musical agent” and can be moved manually, either with a tracked physical object (using a motion capture system, in the early version) or with the XR headset's tracking capabilities (using the Meta Quest 3, in the current version). The spatial audio system maps the sound source position to a loudspeaker array (ambisonic system), and the XR headset renders the agent as a coloured sphere in the physical space.
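To illustrate how a source position can be turned into ambisonic signals, the sketch below computes first-order encoding gains for a point in space. It assumes the AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalisation, x pointing forward, y left, z up) and a listener at the origin; the source does not state which ambisonic order or convention the system uses, and `encode_first_order` is a hypothetical name.

```python
import math


def encode_first_order(x: float, y: float, z: float) -> tuple[float, float, float, float]:
    """Return first-order ambisonic gains (W, Y, Z, X) for a source at (x, y, z).

    Only the direction of the source matters here; distance cues
    (attenuation, reverberation) would have to be handled separately.
    """
    radius = math.sqrt(x * x + y * y + z * z)
    if radius == 0.0:
        # A source at the listener position has no direction:
        # keep only the omnidirectional component.
        return (1.0, 0.0, 0.0, 0.0)
    # With SN3D, the first-order gains are the components of the
    # unit vector pointing at the source, plus W = 1.
    return (1.0, y / radius, z / radius, x / radius)
```

Multiplying each sample of the agent's mono loop by these four gains yields a four-channel signal that an ambisonic decoder can distribute over the loudspeaker array. A source straight ahead, `encode_first_order(1, 0, 0)`, excites only the W and X channels.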

Using specific gestures detected by the XR headset, the agent can be “released” so that it starts moving autonomously and changing its musical material (while keeping its sound properties) through a machine-learning algorithm fed with the musical line provided by the user. When an agent is released, a new one appears, which the user can initialize as a new track and release in turn. This process can be repeated several times to build a multi-track session, with each looping track associated with a sphere-like agent travelling around the 3D audio-visual space.
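As a rough illustration of generating new material from the performer's line, the sketch below uses a first-order Markov chain over the recorded pitches. This is a stand-in chosen for brevity: the source only says that a machine-learning algorithm is used and does not name it. The functions `learn_transitions` and `vary_loop`, and the representation of a loop as a list of MIDI note numbers, are assumptions.

```python
import random
from collections import defaultdict


def learn_transitions(pitches: list[int]) -> dict[int, list[int]]:
    """Map each pitch to the pitches that follow it in the recorded loop."""
    table: dict[int, list[int]] = defaultdict(list)
    # The loop is cyclic, so the last note is followed by the first one.
    for current, following in zip(pitches, pitches[1:] + pitches[:1]):
        table[current].append(following)
    return table


def vary_loop(pitches: list[int], rng: random.Random) -> list[int]:
    """Generate a variation of the same length, using only observed transitions."""
    if not pitches:
        return []
    table = learn_transitions(pitches)
    note = pitches[0]
    variation = [note]
    while len(variation) < len(pitches):
        # Every pitch has at least one successor because the loop wraps around.
        note = rng.choice(table[note])
        variation.append(note)
    return variation


original = [60, 62, 64, 62, 67]  # hypothetical MIDI note numbers
variation = vary_loop(original, random.Random(0))
```

Because the variation only reuses pitches and transitions from the original line, it stays close to what the performer played. Timing, velocity, and the sound properties set with the knobs are left untouched in this sketch.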

As the agents move freely in the physical area, the user can also move around. The user can catch released agents to modify the musical loop and the sound properties, then release them once again in this human-swarm music interaction.
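The release and catch cycle described above can be summarised as a small state machine. The sketch is illustrative only: the class and method names (`Agent`, `Session`, `release`, `catch`) are hypothetical, and details the source does not specify, such as whether several agents can be held at once, are not enforced.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class State(Enum):
    HELD = auto()      # controlled by the performer, loop can be edited
    RELEASED = auto()  # moving and varying its loop autonomously


@dataclass
class Agent:
    loop: list[int] = field(default_factory=list)  # assumed: MIDI note numbers
    state: State = State.HELD


class Session:
    """Tracks every agent in a multi-track looping session."""

    def __init__(self) -> None:
        # The session starts with one empty agent waiting for a loop.
        self.agents: list[Agent] = [Agent()]

    def release(self, agent: Agent) -> Agent:
        """Release a held agent and return the new empty agent that appears."""
        if agent.state is not State.HELD:
            raise ValueError("only a held agent can be released")
        agent.state = State.RELEASED
        fresh = Agent()
        self.agents.append(fresh)
        return fresh

    def catch(self, agent: Agent) -> None:
        """Take back control of a released agent so its loop can be modified."""
        if agent.state is not State.RELEASED:
            raise ValueError("only a released agent can be caught")
        agent.state = State.HELD
```

A session would then alternate between recording into the held agent, calling `release` to hand it over to the swarm, and calling `catch` on any released agent the performer wants to rework.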

The following video shows a performance with the early version of the system (using the Microsoft HoloLens 1 and a motion capture system). The current version (using only the Meta Quest 3 headset) was shown at IRCAM Forum Workshops 2024; a video of it is in the corresponding section of this article.

Technical Information

This system was developed for the master's thesis:

A Human-Machine Music Performance System based on Autonomous Agents

The technical information and research using the early version (Microsoft HoloLens and Optical Motion Capture System) can be found in:

- Master's thesis: A complete document covering the design, implementation, and evaluation of the system.

- Scientific article at the NIME 2023 conference: A research article based on the master's thesis, published at a music technology conference focused on interactive music systems.

- A blog post from the MCT master programme at UiO.

The technical information for the current version (Meta Quest 3) will be released later, as that version is still in development.

Interactive Installations

IRCAM Forum Workshops 2024

The current version of the system using the recently released Meta Quest 3 XR headset was presented at IRCAM (Institut de Recherche et de Coordination Acoustique/Musique) in Paris, France, at the event IRCAM Forum Workshops 2024, which took place from March 19 to 22, 2024.

IRCAM published an article prior to the event to allow attendees to familiarize themselves with the topic beforehand.

IRCAM article: https://forum.ircam.fr/article/detail/xr-human-swarm-interactive-music-system-1/

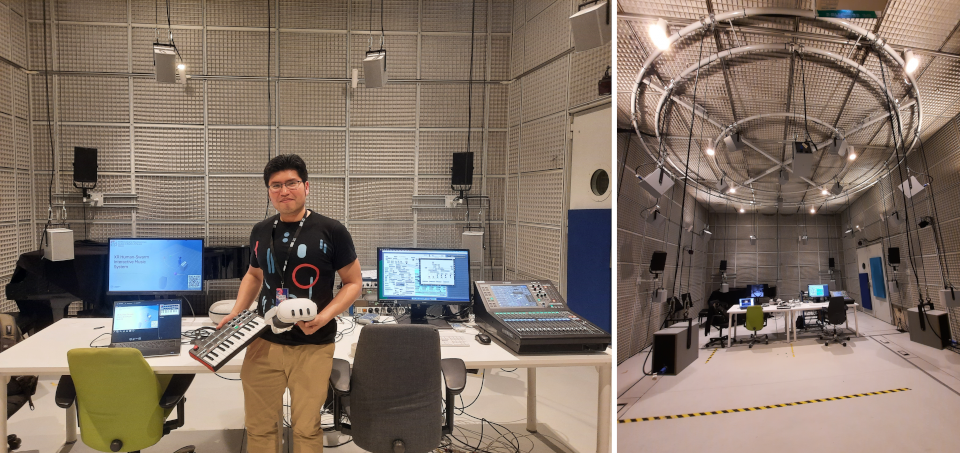

The system was set up in one of IRCAM's cutting-edge facilities (Studio 1). This studio has a spatial audio system with 24 loudspeakers and the necessary equipment to support 3D audio experiences.

The system was categorized as an “interactive installation” that ran for three hours. Attendees had the opportunity to try it. The following video shows some of them using the system and performing unique musical pieces.

The presentation consisted mainly of a description of the system's concept, an overview of how it was developed and of the research conducted, and a demonstration of how it operates. After that, people started using it to perform their own musical improvisations.

The attendees came from diverse backgrounds and included artists, technologists, and researchers interested in using technology to create unique music experiences. Because the audience was mixed, it was important to present the tools and the overall process of developing the system rather than diving into deeper technical details, unless these were specifically requested through questions.

Feedback from users showed strong interest in an approach to creating music through the embodiment of sound that is familiar and, at the same time, novel, and particularly in how it could be incorporated into their own compositions.

Related Publications

- Lucas, P. (2022). A Human-Machine Music Performance System based on Autonomous Agents. Master's thesis, University of Oslo. https://www.duo.uio.no/handle/10852/96115

- Lucas, P. & Fasciani, S. (2023). A Human-Agents Music Performance System in an Extended Reality Environment. Proceedings of the International Conference on New Interfaces for Musical Expression. http://nime.org/proceedings/2023/nime2023_2.pdf