All of our work on Mixed Reality (MR) is associated with the AliceVision Association, which you can find on GitHub: https://alicevision.org. We make it affordable to create 3D environments from photos or videos, and to track the position of cameras while the move through a reconstructed environment.

One big challenge in rendering virtual elements into a real-world view to achieve Mixed Reality is a real-time understanding of depth from the user's point of view.

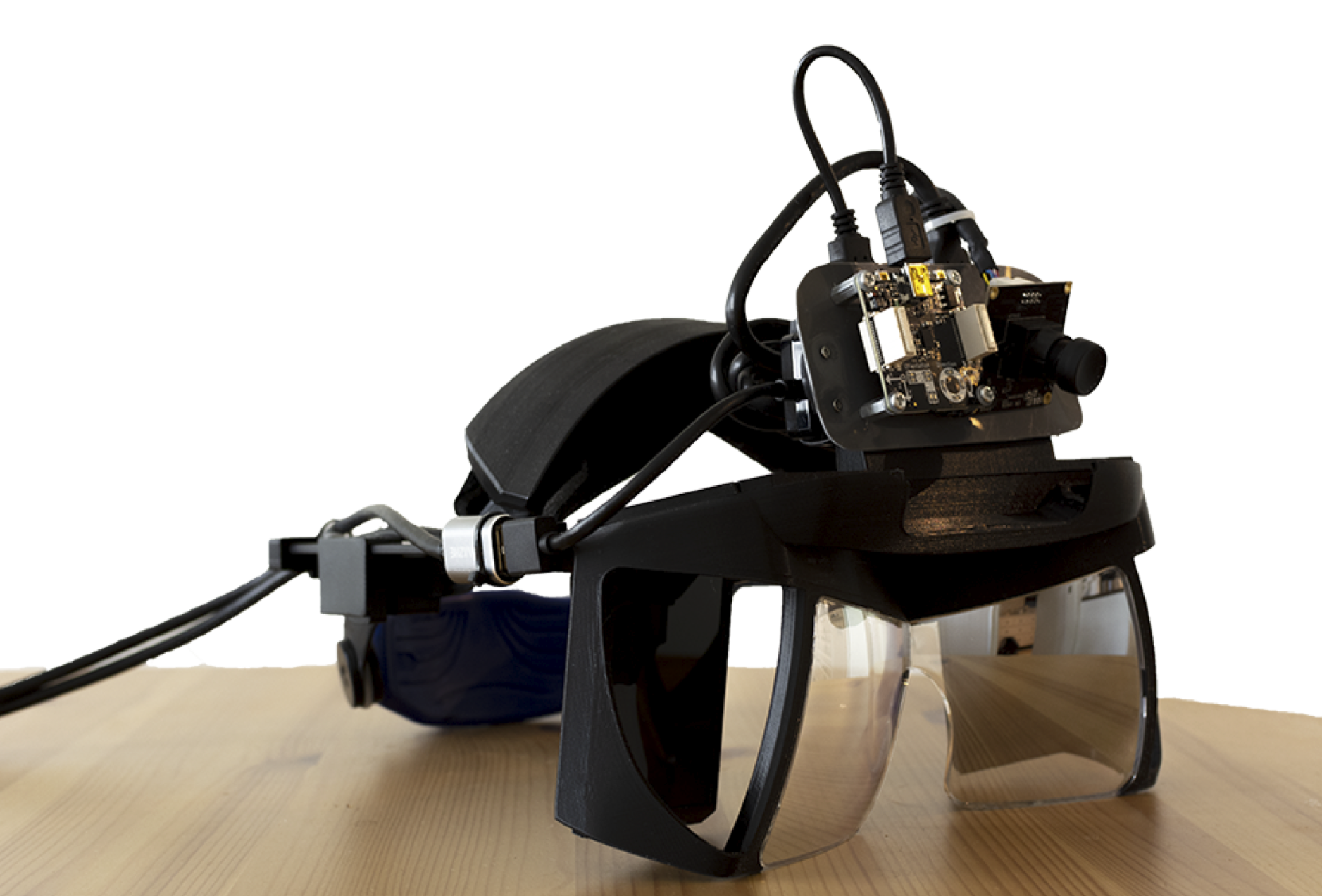

Let's say that the end-user is wearing an AR headset like our Project North Star head-mounted AR display.

The virtual elements should be placed in a natural position at their natural size into the user's field of view. This has in principle been solved by AR applications on the mobile phone. They are capable of understanding the device's movement and are fairly good at keeping the virtual object at the same location in real space. They do, however, miss an important capability. They are not able to figure out whether a real-world object should be in front of or behind the virtual object.

For this, we need a really fast segmentation of objects in the real world, to determine for every pixel in the MR overlay whether it should be in front of the real-world view, and whether it should be hidden.

In this thesis, we explore the option: Depth estimation using dual cameras and block matching

The simplest algorithms look for regions in two cameras' images that are very similar, matches them, and estimates their depth base on the edge pixels. This approach is supposed to be very fast, but it does not consider the case where surface and virtual objects intersect. Finding a means of extending this algorithm to suitable depth understanding is the topic for the first thesis idea.

The current state-of-the-art combines block matching with machine learning to establish an understanding of depth in a known kind of scene.