Typical mobile phone Augmented Reality (AR) applications simply render virtual objects into space at their intended position. If someone walks in front of that position, these applications cannot handle it: the person, who should appear in front of the virtual object, is hidden behind it instead. On a mobile phone this may be acceptable, but it is very irritating when you watch an AR scene through a head-mounted display (HMD).

In recent theses, we have investigated how to use data acquired with a laser scanner (LiDAR) to accurately reconstruct a room.

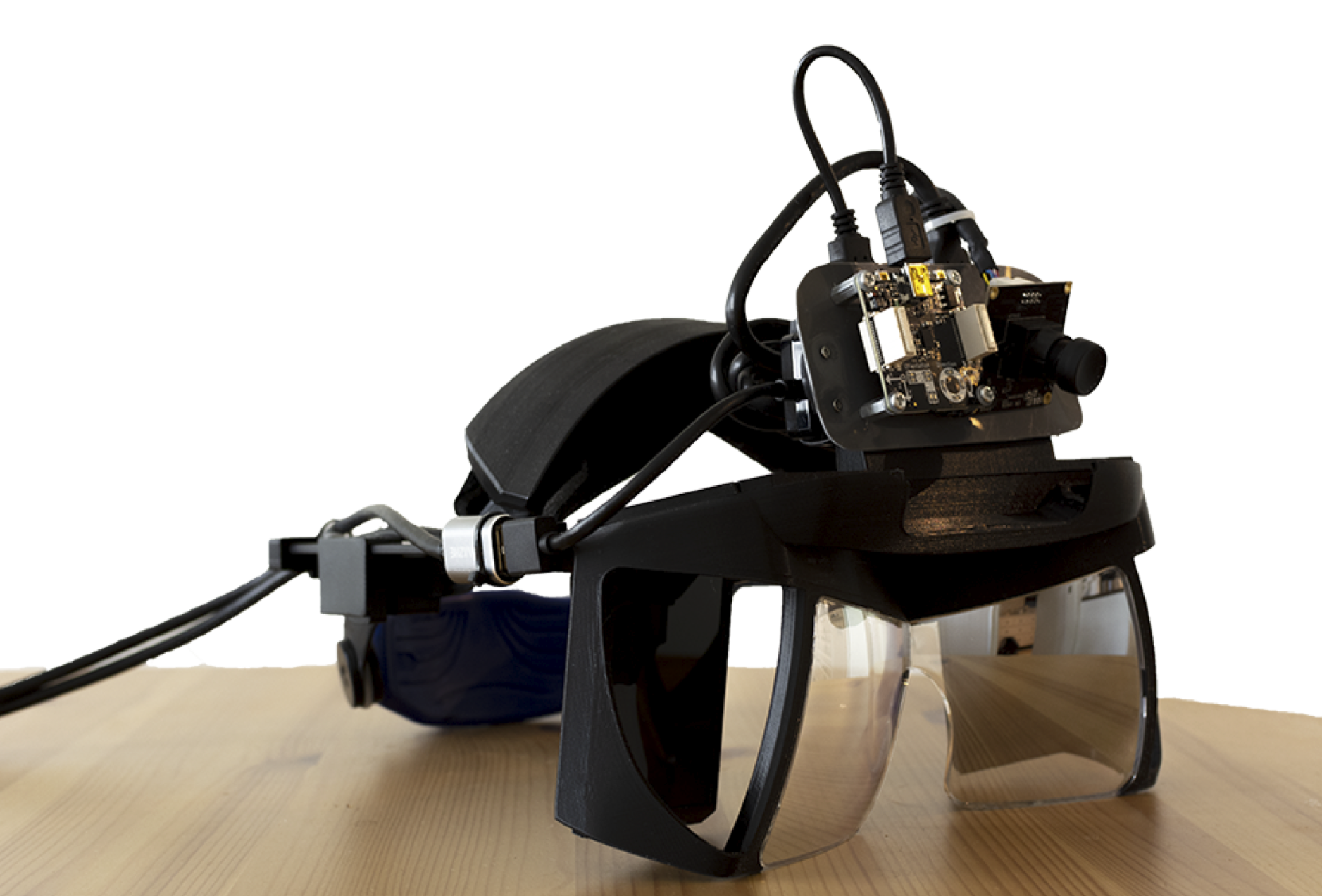

Based on this reconstruction, we want to find out how virtual objects can be placed into an augmented reality scene in a way that is suitable for a head-mounted display such as the Project North Star headset shown in the image.

Placing virtual objects this way comes with two main challenges:

- Determining in real time where the user of the AR headset is actually located and in which direction they are looking.

- Determining in real time which real-world objects occlude the view of the AR object(s) and how to remove the virtual pixels they hide.

Conditions

Language used: C++