In recent years, NLP has seen a renewed interest in vector space models that implement a distributional approach to lexical semantics. Distributional semantics refers to the notion that the meaning of a word can be inferred from its observed contexts of use. Vector space models that represent such contextual usage patterns are variously referred to as semantic spaces, word space models, word embeddings, or simply word similarity models.
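
To make the distributional idea concrete, the following is a minimal sketch of a count-based word space, assuming a simple symmetric context window and raw co-occurrence counts. The function names, the window size, and the three-sentence toy corpus are illustrative assumptions only; toolkits such as word2vec or GloVe learn dense vectors in a different way.

```python
from collections import Counter, defaultdict
import math


def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for tokens in sentences:
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(count * v[key] for key, count in u.items() if key in v)
    norm_u = math.sqrt(sum(count * count for count in u.values()))
    norm_v = math.sqrt(sum(count * count for count in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


# Hypothetical toy corpus, already tokenized; not taken from any real data set.
toy_corpus = [
    "the taxi drove through the city".split(),
    "the cab drove through the city".split(),
    "the dog barked at the moon".split(),
]
vectors = cooccurrence_vectors(toy_corpus)

print(cosine(vectors["taxi"], vectors["cab"]))
print(cosine(vectors["taxi"], vectors["dog"]))
```

In this toy corpus, taxi and cab occur in identical contexts and therefore receive a higher similarity score than taxi and dog, which share only the context word "the".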

While off-the-shelf word vectors trained on large data sets are available for English (typically trained using toolkits such as word2vec or GloVe), such pre-trained models are not currently readily available for Norwegian. One of the aims of this project is to train and make available large-coverage semantic vectors for Norwegian, for example using texts from the Norwegian News Corpus (which would first need to be tokenized and lemmatized).

The second major aim of this project is to create gold-standard resources that facilitate the evaluation of such unsupervised semantic models. This is a topic that has recently sparked a lot of interest in the NLP community, as witnessed by the fact that one of the major conferences in the field (ACL 2016) this summer hosted the First Workshop on Evaluating Vector Space Representations for NLP. Again, no such resource is currently available for Norwegian. When creating an evaluation data set for Norwegian, one could either start from scratch or adapt one of the several resources that already exist for English. Some examples of data sets and evaluation strategies are given below:

- The TOEFL data set of Landauer and Dumais (1997) contains 80 questions, each consisting of a target word along with four candidate lexical substitutes. The task presented to the word space model is to correctly pick the candidate that is most similar to the target.

- WordSim-353 (Finkelstein et al., 2001) contains 353 English word pairs with manually assigned similarity scores (averaged over several judges), measuring the relatedness of each word pair on a scale from 0 (totally unrelated words) to 10 (closely related or identical). To evaluate a word similarity model against this resource, one measures the correlation between the human judgments and the similarity scores computed by the model.

- While WordSim-353 to some degree focuses on relatedness or association, SimLex-999 is a more recent resource that aims to capture similarity in a stricter sense (Hill, Reichart and Korhonen, 2014). To illustrate the difference: while taxi and traffic are semantically related, taxi and cab are semantically similar.

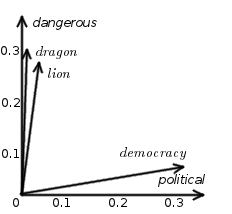

- A final benchmark worth mentioning is provided by the semantic and syntactic analogies data set described in the 2013 paper by Mikolov et al., Linguistic Regularities in Continuous Space Word Representations. This data set focuses on bilexical analogical relations that can be either semantic, such as clothing is to shirt as dish is to bowl, or syntactic, such as car is to cars as apple is to apples.
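
The evaluation strategies listed above can be sketched in a few lines each. The code below is only an illustration under stated assumptions: word vectors are plain Python lists, similarity is cosine, the correlation measure is Spearman's rank correlation (a common choice, though the text above only says "correlation"), and analogies are solved by a simple vector-offset search. All function names, the toy vectors, and the toy ratings are made up for the example and do not come from any of the data sets mentioned.

```python
import math


def cosine(u, v):
    """Cosine similarity between two dense vectors given as lists."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def synonym_accuracy(vectors, questions):
    """TOEFL-style test: share of questions where the nearest candidate is the gold answer."""
    correct = 0
    for target, candidates, gold in questions:
        guess = max(candidates, key=lambda c: cosine(vectors[target], vectors[c]))
        correct += guess == gold
    return correct / len(questions)


def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either input has no variance."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    dev_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    dev_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (dev_x * dev_y) if dev_x and dev_y else 0.0


def rating_correlation(vectors, rated_pairs):
    """WordSim-style test: Spearman correlation between human and model scores."""
    human = [score for _, _, score in rated_pairs]
    model = [cosine(vectors[a], vectors[b]) for a, b, _ in rated_pairs]
    return pearson(average_ranks(human), average_ranks(model))


def solve_analogy(vectors, a, b, c):
    """Answer 'a is to b as c is to ?' with the word nearest to b - a + c."""
    query = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine(query, vectors[w]))


# Hand-made toy vectors and ratings, for illustration only.
toy_vectors = {
    "car": [1.0, 0.0, 0.0], "cars": [1.0, 0.0, 1.0],
    "apple": [0.0, 1.0, 0.0], "apples": [0.0, 1.0, 1.0],
    "taxi": [0.9, 0.1, 0.0], "cab": [0.9, 0.15, 0.0],
}
toy_questions = [("taxi", ["cab", "cars", "apple", "apples"], "cab")]
toy_ratings = [("taxi", "cab", 9.0), ("car", "cars", 7.0), ("car", "apple", 1.0)]

print(synonym_accuracy(toy_vectors, toy_questions))
print(rating_correlation(toy_vectors, toy_ratings))
print(solve_analogy(toy_vectors, "car", "cars", "apple"))
```

For a Norwegian evaluation resource, the same three functions could in principle be reused unchanged; only the question, rating, and analogy files would need to be created or adapted.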